There is a wide range of opinions about most medical topics in veterinary medicine, and rarely sufficient evidence to definitively establish who’s right and who’s wrong. For better or worse, we have tremendous individual latitude in deciding what treatments to offer and in counseling our clients.

But is there ever a point where a vet is so vehemently opposed to so much of what constitutes modern veterinary medicine that they shouldn’t be allowed to hold a medical license? If someone reviles the rest of the profession, claims that our most common and well-established interventions are useless or harmful, and offers ONLY unproven or disproven alternative treatments, why should they be allowed to hold the same license and practice under the same terms as the rest of us? Is it misleading to animal owners for someone to call themselves a veterinarian when their entire philosophy and practice are inconsistent with the accepted approach of the veterinary profession? Are there truly no standards at all for what constitutes legitimate veterinary medicine?

Of course, in reality there is virtually nothing in the way of a standard of care within veterinary medicine. Government seems uninterested in regulating the profession beyond policing drug abuse. And the institutions of organized veterinary medicine, such as the AVMA, have absolutely no interest in interfering with the sacred autonomy of individual veterinarians, regardless of what sort of treatments they employ. The AVMA notoriously refused to consider even the small step of acknowledging that homeopathy is a useless fraud, and it includes complementary and alternative medicine as part of the definition of veterinary medicine, despite being unable to define what it is. The general approach is that if something makes vets money, it is fine to offer regardless of the state of the scientific evidence.

However, there are some principles of law and ethics that have been articulated which the extreme anti-medicine vets seem to violate. For one, veterinarians are generally required by law to have a degree from an accredited school of veterinary medicine. The overwhelming majority of what is taught in such schools is science-based, conventional medicine. The purpose of such a requirement is to ensure the public is not harmed by practitioners who don’t understand science or scientific medicine and who offer unscientific, dangerous quackery instead. But what is the value or meaning of such a degree if an individual repudiates nearly all of what they have been taught? A vet who explicitly rejects the basic principles of science and the core therapies of veterinary medicine is no less a threat to the public than a homeopath or other non-veterinarian who practices on animals without a formal veterinary education. In fact, such faux veterinarians are even more of a threat to veterinary patients because the public can be misled into believing they are legitimate medical practitioners.

The AVMA ethics guidelines also specifically prohibit deliberately defaming other veterinarians or deceiving the public in one’s capacity as a vet:

Veterinarians must not defame or injure the professional standing or reputation of other veterinarians in a false or misleading manner. Veterinarians must be honest and fair in their relations with others, and they shall not engage in fraud, misrepresentation, or deceit.

Yet the extreme alternative vets who base their practice entirely on dismissing mainstream medicine as useless and dangerous, and who claim to be part of this profession while rejecting its basic foundations, are inherently defaming other vets and deceiving the public. What is the purpose, other than being able to make money from clients, of calling yourself a veterinarian while simultaneously denouncing the rest of the profession as greedy, negligent, and dangerous? Why should this be allowed?

Of course, even the majority of so-called “holistic” veterinarians make at least some concessions to science and scientific medical practices. I am not suggesting that merely offering untested or unproven therapies disqualifies one from serving as a licensed veterinarian. Even offering clear nonsense, such as homeopathy and “energy medicine,” can be combined with otherwise acceptable patient care in an integrative model, though I believe the profession needs to do more to inform the public about the lack of value of such practices. But there are some pretty extreme voices in alternative veterinary medicine who reject the core values and practices of the profession, and it seems unfair to the public and the profession that these individuals can present themselves as licensed veterinarians on an equal basis with the rest of us. Here are a few examples of these most extreme voices in alternative veterinary medicine.

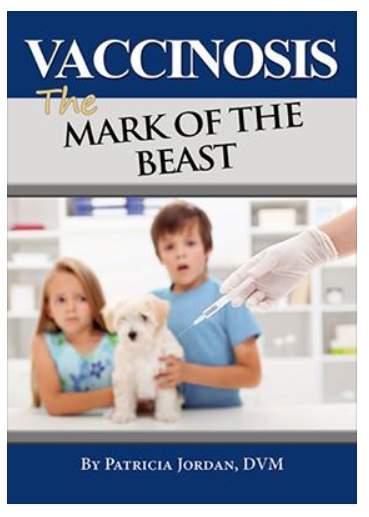

Patricia Jordan-

Dr. Jordan is one of the most vehement opponents of vaccination in the veterinary field. She is the owner and author of the web site and book Mark of the Beast Hidden in Plain Sight: The Case Against Vaccination. Here is a sample of the rhetoric from her site.

WE SHOULD REWRITE THE BOOKS OF MEDICINE TO REFLECT THE UNDERSTANDING THAT DISEASE HAS EVOLVED FROM THE VERY USE OF VACCINES.

NEVER SHOULD WE HAVE ALLOWED THE INNOCULATION OF POISON, THE GRAFTING OF MAN AND BEAST. NOW WE ALL CARRY THE SCAR, OF MEDICAL SUPERSTITION THE GENETIC PLAGUE OF INQUITY

The purpose of putting the Mark of the Beast together was to provide education for the reader or listener to a very important quest that apparently has been going on from the beginning of the illusion of time….conventional medicine [is] not the direct path to true healing and wellness…true health and wellness comes from a very natural setting and one from the relationship of the individual in balance with the earth and all of the treasures a healthy ecosystem has to offer…The important ingredient everyone also needs is right relationship with the other living organisms of the environment we share, respect for each other and the most holy relationship that of the one with the intelligence that designed this most wonderful system. Our fall from right relationship is as much responsible for disharmony and disease as is the turmoil the daily disturbance this imbalance maintains…Vaccines and drugs are at odds with the intelligence of the almighty design and getting back to the garden means getting back to the natural form…

Not only does she espouse absolutely insane ideas about the dangers of vaccination, she promotes bizarre conspiracy theories about cover-ups at the CDC and malign cabals of pharmaceutical companies hiding clear evidence of widespread harm to people and pets from vaccines, and she actively blames the veterinary profession for choosing profit over the welfare of patients.

THE PROFITABLE SECRET OF VACCINE INDUCED DISEASE

Brave veterinarians are speaking out, revealing veterinary malpractice committed through pharmaceutical pressure and greed. THIS visual documentation is the first on the horrors of VID.

DEATH BY VACCINOSIS

Patricia Jordan, DVM, CVA, CTCVH, & Herbology

Unforgettable photos, video and veterinary records prove widespread veterinary malpractice through unregulated over-vaccination.

Vaccines are criminal in what they are doing to our pets and our people….CRIMINAL

Listen to the parents, not the Pediatricians! Listen to the pet owners and not the vets!

Doctors that spend the time to find and promote health take more time in the exam room, and it doesn’t make financial sense. Doctors aren’t rewarded for the health of their patients; they are rewarded when their patients are sick and they need testing and medical intervention. And even the most idealistic and dedicated doctors arrive in the profession with large student loans to pay. Volume of patients, not vitality of patients will pay the bills

Doctors and veterinarians are not trained in nutrition because it will not help them financially. There is much more money in surgery and drugs. We learn our medicine in programs and teaching hospitals that are typically funded by those who have the most to gain financially: the drug companies.

Have heard good reports on my AVH [Academy of Veterinary Homeopathy] listserve about [pet] insurance. Of course you shouldn’t be getting any of the conventional poison in the first place and it would be highly doubtful you would ever need it anyway.

Bottom line, doctors get paid a lot of money to pander vaccines PERIOD.

[Bill Gates, specifically in his efforts to support vaccination of poor children] is NO PHILANTHROPIST he is in fact the biggest money launderer for getting funds to the harmaceutical industry for which our government is intimately involved with profit sharing WAKE UP PEOPLE

Apparently the DEA also works for the FDA which works for the harmaceutical companies…….

Another whistleblower, another bit of truth that is being covered up by the CDC.. The government admits that live virus vaccines should not be administered to those with immune deficiencies. What they ***KNOW BUT FAIL TO DISCLOSE*** are the mechanisms of immunosuppression. It’s a cascade effect that leads to the diseases that are denied by the criminal CDC to protect profits instead of human life.

Of course the WHO is as conflict ridden as the rest of health care and governments who profit directly from it.

The vaccines are unsafe and they are unnecessary, they are also the FOUNDATION of conventional medicine which unfortunately is not to be confused with conventional “wisdom”. This is an example of how the system is built upon a foundation of “speculation” or unethical medicine that is now being questioned. Vaccines DO NOT confer herd immunity and they DO NOT confer individual immunity. They are however, the “golden calf” of the white coats that grows into the “sacred cow” of vaccine induced disease. What will they do when the public figures out they have been lied to for the purpose of “making a living by killing” by the pharmaceutical medical industrial complex that is only in operation due to government financial scaffolding and protection?

This level of vitriolic condemnation of the entire veterinary profession, human medical profession, and public health system would seem to disqualify one from being considered a doctor in any meaningful sense.

Will Falconer-

I’ve written about Dr. Falconer several times. He is a homeopath who is adamantly opposed to almost all vaccination, parasite prevention and treatment, antibiotics, conventional diets, and just about everything else that constitutes the practice of scientific veterinary medicine and animal care. As with Dr. Jordan, he not only practices the completely worthless nonsense that is homeopathy, he actively recommends it instead of conventional medicine, and the advertising for his practice is founded on denigrating the rest of the veterinary profession.

I put the antibiotics away for good when my own cat Cali, in trying to have her first kittens, did so out in the wilds of Haleakala on Maui, and came dragging herself in with a horribly infected uterus, leaking a foul smelling discharge, and clearly seriously ill. I knew even antibiotics would have a hard time helping her, but I also knew I had something deeply curative to offer now: homeopathic medicine.

Cali was treated with pyrogenium 30C, a remedy made from rotten beef…Ater a few doses of this remedy and a couple of uterine flushes with a bit of anti-infective Chinese herb (Yunnan Paiyao), Cali made a full and remarkable recovery. It was as though she’d never been sick. I had an “Ah-ha!” moment, and tossed my antibiotics in the trash.

The best that conventional medicine can do with chronic disease is to control symptoms through suppressive therapies. This is fraught with problems, including side effects from the drugs, and apparently “new,” more serious diseases arising from the continued course of suppression.

Heartworm prevention kills dogs?

I never knew that when they first came out with the heartworm drugs, back in the 80’s. I learned it when I left conventional practice and started to dig into what can hurt your dog, make her ill, and even kill her…It turns out that the drugs commonly pushed on you as “heartworm prevention,” carry the risk of autoimmune disease.

The Top Five Ways to Healthy Pets

Here are the five things that will have the greatest impact in keeping your animal vital, healthy, and living a long, joyful life with you.

(doing the opposite has been the biggest predictor of illness and dying too soon that I’ve seen in my 30+ years of practice)

- Stop Vaccinating Them.

- Feed Them What Their Ancestors Ate.

- Stop Using Pesticides to Kill Fleas.

- Stop Using Poisons for Heartworm Prevention.

- Give Them Raw Bones (for the whitest teeth and freshest breath ever).

Imagine avoiding risky vaccinations while getting very strong immune protection against parvo and distemper, the two potentially deadly diseases of puppies.

You know vaccinations are grossly over provided in our broken system of veterinary medicine. The pushing of vaccinations by Dr. WhiteCoat throughout your animal’s life doesn’t add to her immunity…And you know that vaccines are harmful. Chronic disease often follows vaccination, even a single vaccination.

The model of disease prevention put forth by conventional veterinarians is fundamentally flawed. It is in fact damaging the animals whose owners partake in it.

This broken model of disease “prevention” will never change from Dr. WhiteCoat’s side, who sells it:

- He refuses to see the possibility of it causing harm.

- He’s comfortable in it; change loses to maintaining the status quo.

- He profits from providing it and profits again from the disease it causes.

Charles Loops-

Dr. Loops, another homeopath, is an example of the alternative medicine convert. After initially practicing conventional medicine, he decided that science-based medicine is worthless, and he now uses homeopathy almost exclusively, even for fatal diseases such as cancer. In what sense is he a veterinarian rather than simply a homeopath? What does it matter if he has a veterinary degree if he has repudiated everything he learned in getting it? Should the public know that he has chosen to practice magic instead of medicine, and should he still be able to legally practice as a veterinarian?

Veterinarians and animal guardians have to come to realise that they are not protecting animals from disease by annual vaccinations, but in fact, are destroying the health and immune systems of these same animals they love and care for. Homeopathic veterinarians and other holistic practitioners have maintained for some time that vaccinations do more harm than they provide benefits.

My practice is mainly by referral and 95% by telephone consultation. I have treated thousands of cases using the principles of classical homeopathy and I continue to find this system of gentle healing to be the most effective therapy that has ever existed.

Sixty percent of my new cases have cancer and most of these several hundred companions each year survive longer and have a better quality of life than cancer patients treated with Western medicine or other modalities…Having practiced 32 years as a veterinarian, ten with Western medicine and over twenty with homeopathy, there is little doubt about which is the more effective system and which has the most curative approach to disease. The side-effects of homeopathic treatment are improved, overall health and a heightened sense of well-being; side-effects not typically found with Western medicine.

Jenifer Preston-

Another example of such a convert from veterinary medicine to anti-medicine is Dr. Jenifer Preston. She too bases her approach on blaming the rest of the veterinary profession for nearly all the illnesses pets suffer from. So again, how is she a veterinarian rather than just a homeopath?

Dr. Preston practiced allopathic medicine for twenty five years before realizing that the vaccinations and drugs she dispensed daily were causing more problems than they ever solved and often to a more severe degree…The drugs prescribed every day were literally destroying healthy organs and shortening lives.

Epilepsy in dogs and cats can develop at any age. Allopathic veterinarians do not give you any real reason that this develops in your beloved dog or cat.

What the vets don’t realize is that they themselves have very likely created this syndrome with vaccines. Yearly administration of multi-valent vaccines assault the animal’s immune system over and over. More and more animals are developing ‘auto-immune’ diseases and the allopathic community has no idea why.

A majority of diseases plaguing dogs, cats, and horses today are what is termed auto-immune syndromes. This means that your companions have had their whole immune system severely compromised, so that their body cannot naturally maintain optimum health. Nature’s defense, so cleverly installed in every mammal, has been dismantled. What are the obvious culprits here?? The use of VACCINES and DRUGS over and over and over.

Do you know that EVERY drug has at least one side effect–many very serious or fatal?

Do you know that animal vaccines always contain mercury, formaldehyde and/or aluminum?

Do you know that animal insecticides are not only poisoning your pet but poisoning our planet?

Over the years, drugs and vaccines have made our pets, our beloved companions, seriously sicker and have shortened their natural life span. Why do we so often see premature aging? How do we STOP this trend? Treat holistically! Naturopathic veterinarians have found that these alternative products are accepted so much easier by the animal’s body and therapy is so much quicker and more complete!

Al Plechner-

Of course, Dr. Plechner has provided material for some of the most read articles and most virulent hate mail on this blog. He is an odd duck in that his practices fit under neither mainstream nor typical alternative medical systems. Basically, he has invented his own cause of all disease and his own treatment for all disease, utilizing conventional tests and medications in completely idiosyncratic ways. There is no scientific legitimacy to his practices, merely his opinion and those anecdotes he chooses to promote. And I have heard not only from pet owners whose animals have been harmed by him, but also from veterinarians who have had to treat patients injured by his practices. These veterinarians have been unwilling to tell the public about their experiences out of a desire to avoid conflict and a sense of loyalty to the profession. This sense is clearly one Dr. Plechner doesn’t share, as he promotes his practices, once again, on the basis of condemning mainstream veterinary medicine as greedy and ineffective.

profits made by all of the cancer treatment drugs and the associated services involved in treating cancer. Sad to say, the treatment of cancer has proven itself to be, a tremendously successful revenue builder. Why wouldn’t you keep a possible cure under wraps?

But of course, this is purely a hypothetical question. We couldn’t possibly believe that our medical institutions could be callously driven by the pursuit of profit. Why, they’re as ethical as our great financial institutions are and look at how successful they’ve been.

The frightening fact is that a cancer cure could prove to be financially disastrous to the pharmaceutical and all of the other dependent medical industries.

“HE WHO PAYS THE PIPER, CALLS THE TUNE”

Much of so called ‘science’ operates on this basis.

And most of the medical/ big pharma colluded industry has a cozy little relationship with government, msm and the educational institutes to boot.

We should class them as ‘Disease Care’ providers, and not Health Care!

Tom Lonsdale-

Dr. Lonsdale is a promoter of raw diets. This in itself is not unusual. However, his obsession with this topic has led him to a broad rejection of nearly every aspect of science-based veterinary medicine, and a barrage of accusations characterizing the veterinary profession as fundamentally corrupt and intentionally harmful to animal health.

He has called the move towards more evidence-based practice “laying smoke screens, rearranging the deck chairs on the sinking ship and fiddling whilst the pets crash and burn.”

There’s a creeping realisation that much veterinary ‘evidence’ fails the scientific test. Over-servicing based on dubious interpretations of the evidence — or suppression of the evidence — is commonplace. Needless vaccinations against non-existent diseases prop up the veterinary economy.

Pick up any veterinary publication and you’ll see big pharma exert control and invoke their interpretations of the ‘evidence’. Of greater concern, junk pet-food makers enjoy special relationships with veterinary regulators, schools, associations, researchers, suppliers and practitioners.

Defenders of the system employ dodgy assumptions and defective logic. Little or no consideration is given to subjective assessments, the basis of most decisions by clients and clinicians, or the complex interconnectivity of our world. Vets pursue the reductionist, treatment and germ theory paradigms with varying degrees of commitment and expertise, but seldom or never consider the limitations of those belief systems.

Why there is an alliance between junk pet food makers (‘barfers’ included), many veterinarians and fake animal welfare groups designed to keep pet owners confused and in the dark?

See how incompetence and maladministration characterise the veterinary endeavour.

The situation is grim and starts with the veterinary profession’s inattention to detail. Whilst it is obvious to most folks…that junk foods are bad for health the veterinary profession appears to have been too busy to notice. Once pointed out, the fact that an artificial diet fed monotonously either directly or indirectly poisons animals, the profession should have risen up and acted. Instead the professional ethic ruled that a mass cover up should apply. With the cover up safely in place profits were to be made. Increasingly elaborate ploys are now used in persuading the populace to a. keep more animals and b. feed them high priced artificial concoctions.

It is my belief that the profession’s political mismanagement and acquiescence is matched by a naive scientific methodology… Our way out of the mire is via a holistic assessment…. Since the holistic approach is not usually taught or practised, here are a few tips which may be of help. Firstly, make sure to have fun. There are no columns of meaningless figures in this approach nor disembodied dry facts.

Bottom Line

The purpose of regulating veterinary medicine, licensing veterinarians, and establishing even the minimal standards of practice and ethics that exist is to protect the public. People should be able to expect that a licensed vet not only has an education in the principles of science and science-based medicine but is prepared to use them in patient care. While there is great space for individual judgment and variation in exactly how we treat patients, it is deceptive to the public, unfair to other veterinarians, and dangerous to patients to allow vets who actively repudiate the core principles of science and scientific medicine, defame the rest of the profession, and offer only treatments that are untested or directly incompatible with science to practice as if they were legitimate doctors of veterinary medicine. If they wish to abandon the profession and its values and methods, then they ought to give up the rights and privileges of membership and present themselves not as veterinarians but as homeopaths, herbalists, Plechnerists, or whatever other type of alternative practitioner fits their ideology.