I write regularly about the American Holistic Veterinary Medical Association (AHVMA) and its fundraising arm, the American Holistic Veterinary Medicine Foundation (AHVMF). These organizations are very much the tip of the spear for pseudoscience in veterinary medicine. Unfortunately, despite peddling ideas that are often fundamentally incompatible with well-established science and with the historical evidence that science is the best way to understand the world and improve health, these organizations are remarkably effective at pretending to have a genuine interest in scientific research on alternative therapies. They raise large sums of money (AHVMA and AHVMF financial statements) and then use that money, and that superficial appearance of accepting the importance of science, to infiltrate academic institutions and veterinary organizations.

From time to time, the leaders of these organizations share their true feelings about science and science-based medicine, and I feel it is useful to draw attention to those so that, hopefully, the truth beneath the façade will be visible and both veterinarians and pet owners can make fully informed judgments about the intentions and agendas of these groups.

In a recent interview in Natural News, a well-known platform for promoting the most egregious anti-science quackery, the president of the AHVMF, Dr. Barbara Royal, presented an honest and quite bizarre characterization of science-based veterinary medicine.

Dr. Barbara Royal, president elect of the American Holistic Veterinary Medical Foundation, believes that mainstream American physicians and veterinarians are only educated in one way.

“MDs and DVMs learn a lot about surgery, a lot about medications, a lot about disease…but they don’t learn about the causes of health.”

Royal believes in an integrative approach to health, NOT interventionist medicine. She strives to focus on supporting pet health as a first priority instead of treating disease after it has developed. This philosophy is challenging the status quo. Today’s mainstream veterinary students are taught to treat pets with an approach emphasizing drugs and surgery.

Throwing a drug at the problem often leaves pets with side effects and an impending death. The philosophy of, “take this drug, we don’t really know how it works, good luck,” is a symptom cover-up approach that reaps more consequences in the end. Although mainstream veterinarians mean well and want to help, their philosophy – the way they were taught – is a patch work that ultimately leads to unintended consequences.

The clichés that conventional medicine treats only symptoms without any interest in the underlying causes of disease, that preventative healthcare is ignored by conventional veterinarians, and that science-based medicine ignores all interventions except surgery and pharmaceuticals are often repeated by proponents of alternative approaches despite being obviously and manifestly untrue.

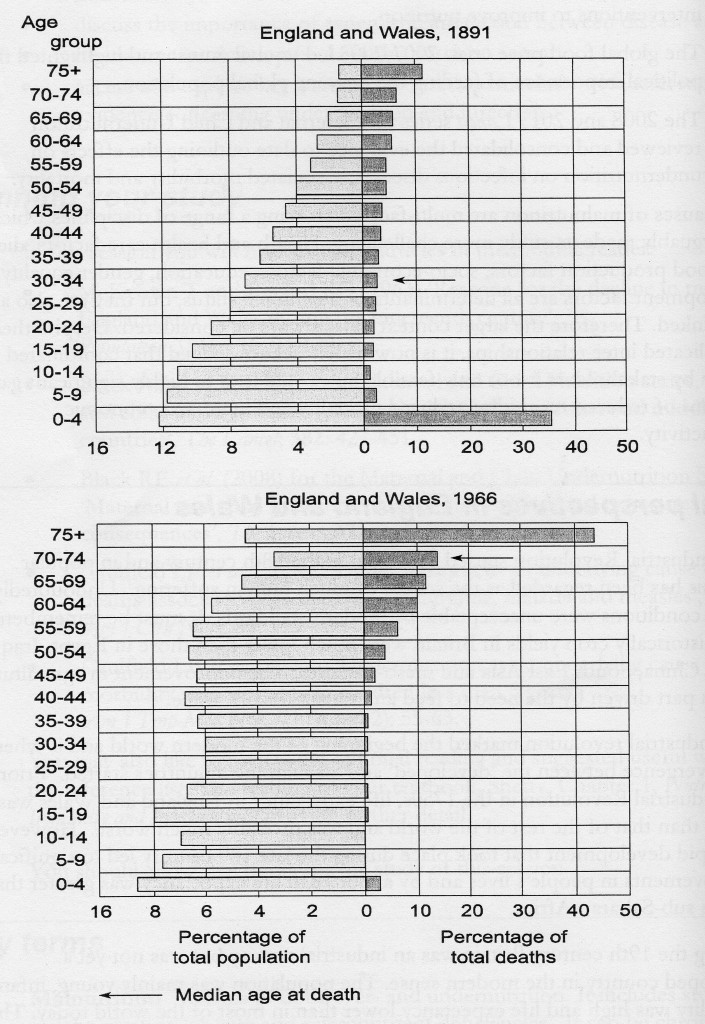

Dr. Royal offers a few tidbits from the alternative perspective the AHVMF advocates, which include further clichés, such as blaming mysterious toxins, conventional diets, vaccines, and of course medicines (ah, I mean “drugs”) for illness, and suggesting that without the products of science-based medicine our pets would be living natural, healthier lives. It is hard to imagine anyone making such an implication with a straight face. The evidence is overwhelming that human health and longevity have improved dramatically since the advent of the scientific approach, to a degree unimaginable during the thousands of years of pre-scientific approaches to health. Those approaches sound very much like the “natural” approach the AHVMF advocates, complete with ancient healthcare strategies based on pseudoscience and mysticism, such as Traditional Chinese Medicine, herbal medicine, acupuncture, and so on. (See the graphs below for a couple of illustrations of this well-documented history.)**

She encourages pet owners to eliminate corn and wheat from their pet’s diets, because these are pro-inflammatory, unfamiliar ingredients to an animal’s body. She teaches about pet food processing methods that create heterocyclic amines and acrylamides, which are potent carcinogens.

She talks about concepts like the lack of moisture content in kibbles which lead to dehydration in the animal. She talks about the amount of glycotoxins in dog food that cause inflammation in pets.

Royal also warns against overdoing those things that could cause harm. She teaches not to over medicate or over-vaccinate a pet, teaching how this interferes with an animal’s natural ability to heal.

Like other leaders of these organizations, Dr. Royal pays lip service to the value of science when speaking to those who truly do recognize the importance of the scientific approach, but when speaking with fellow believers in alternative therapies, she makes no reference to scientific evidence at all but is quick to offer the well-respected testimonial/anecdote as validation for the preceding rejection of science-based medicine and its philosophy and methods:

On the inspiring stories page of the AHVMF page, one can find many testimonials of pets cured through holistic means.

Despite being psychologically compelling, such miracle stories are not a reliable way to evaluate whether a healthcare intervention is safe and effective. But they are very effective as a marketing tool, which ultimately matters more to the AHVMA and AHVMF than inconveniences such as what is true.

The fact that Dr. Royal and others in these organizations have a deep, genuine faith in their methods is not in itself objectionable. What is disturbing, and dangerous for our patients, is that they seem able to obscure the fundamental rejection of science and science-based medicine at the core of their ideology. It is bizarre and disturbing that an organization run by individuals who blithely mischaracterize conventional medicine, as Dr. Royal does in this article, and who reject the basic philosophy of science, or even the need for it, can be welcomed as an affiliate within the American Veterinary Medical Association and as a source of funding for research and educational activities at schools of veterinary medicine. The growing influence of these organizations does not bode well for the future of veterinary medicine as a profession or, more importantly, for the continued progress in healthcare that benefits our patients.

** Here is a chart which reminds us that before the 20th century, and the advances in agriculture, sanitation, and medicine brought about by the accumulating scientific knowledge of the preceding two centuries, human life was short and few children could count on living to adulthood, much less a ripe old age. This pattern had remained unchanged for thousands of years despite the “ancient wisdom” of trial-and-error folk medicine now advocated by the AHVMA and AHVMF. The chart below, while more speculative than the one above, illustrates this based on all the evidence currently available.

This is the reality the AHVMA is so eager to ignore in favor of a naïve image of a Golden Age of health and well-being prior to the development of science and technology. No one can deny that the fruits of science have not always been benign or wisely used. But denying that human life overall is longer and healthier thanks to science than it ever was during the millennia in which we relied on more “natural” methods is irrational and dangerous.